Unmanned Aerial Vehicles (UAVs) traditionally rely on GPS for navigation. When GPS signals are jammed or unavailable, however, UAVs are severely limited in performing their intended functions. This necessitates alternative technologies that enable UAVs to navigate and operate effectively in such environments. This project explores the potential of image processing and AI-based solutions to create a robust navigation system that provides real-time localization and navigation without reliance on GPS.

Image-based navigation can overcome the limitations of both GPS and inertial systems, providing accurate and reliable position estimates in GPS-contested environments. This project seeks to build on this approach to develop a reliable navigation system for UAVs when GNSS signals are compromised.

Research, Application & Project

The primary objective of this project is to develop a prototype UAV navigation system capable of operating autonomously in GNSS-denied zones using image processing and AI technologies.

The steps required to achieve this objective are as follows:

- Image Acquisition and Object Detection: Capture high-quality images of the environment using UAV-mounted cameras. Use AI models, such as YOLO, to detect and identify objects of interest in real time. The detection process will enable the UAV to identify the landmarks and obstacles essential for navigation.

- Real-World Coordinate Calculation: Derive the real-world coordinates of detected objects using camera parameters and advanced algorithms. Employ triangulation and projection techniques to map image data into real-world space, enabling precise localization of objects relative to the UAV.

- UAV Localization: Estimate the camera’s (and hence the UAV’s) position in the environment by analyzing the spatial relationships between detected objects and their real-world coordinates. Integrate these calculations with onboard inertial sensors to enhance localization accuracy.

- Simulation: Validate the proposed system through initial simulations in Unity 3D to demonstrate feasibility.

- Hardware Implementation: Upon successful simulation, implement the system on actual UAV hardware to test its performance in real-world GNSS-denied scenarios.
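
The coordinate-calculation and localization steps above can be illustrated with a minimal sketch. It assumes a nadir-pointing pinhole camera, flat ground, a known flight height, and image axes aligned with the map axes (no yaw). The `Camera` class, the function names and all numeric values are hypothetical and are not taken from the project.

```python
from dataclasses import dataclass


@dataclass
class Camera:
    """Pinhole intrinsics in pixels (hypothetical values, normally from calibration)."""
    fx: float  # focal length along image x
    fy: float  # focal length along image y
    cx: float  # principal point x
    cy: float  # principal point y


def pixel_to_ground_offset(cam, u, v, height_m):
    """Offset in metres from the point directly below the camera to the
    ground point imaged at pixel (u, v). Assumes the camera looks straight
    down at flat ground from height_m."""
    dx = (u - cam.cx) / cam.fx * height_m
    dy = (v - cam.cy) / cam.fy * height_m
    return dx, dy


def locate_uav(cam, detections, height_m):
    """Estimate the UAV's map position from detected landmarks.

    detections: list of ((u, v), (X, Y)) pairs, where (u, v) is the pixel
    centre of a detected landmark and (X, Y) is its known map position in
    metres. Assumes map x/y are aligned with image x/y (no yaw).
    Each landmark gives one position estimate; the estimates are averaged.
    """
    if not detections:
        raise ValueError("at least one landmark detection is required")
    xs, ys = [], []
    for (u, v), (X, Y) in detections:
        dx, dy = pixel_to_ground_offset(cam, u, v, height_m)
        xs.append(X - dx)
        ys.append(Y - dy)
    return sum(xs) / len(xs), sum(ys) / len(ys)
```

A real system would also need to account for camera tilt, heading, lens distortion and terrain height, which this sketch deliberately leaves out.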

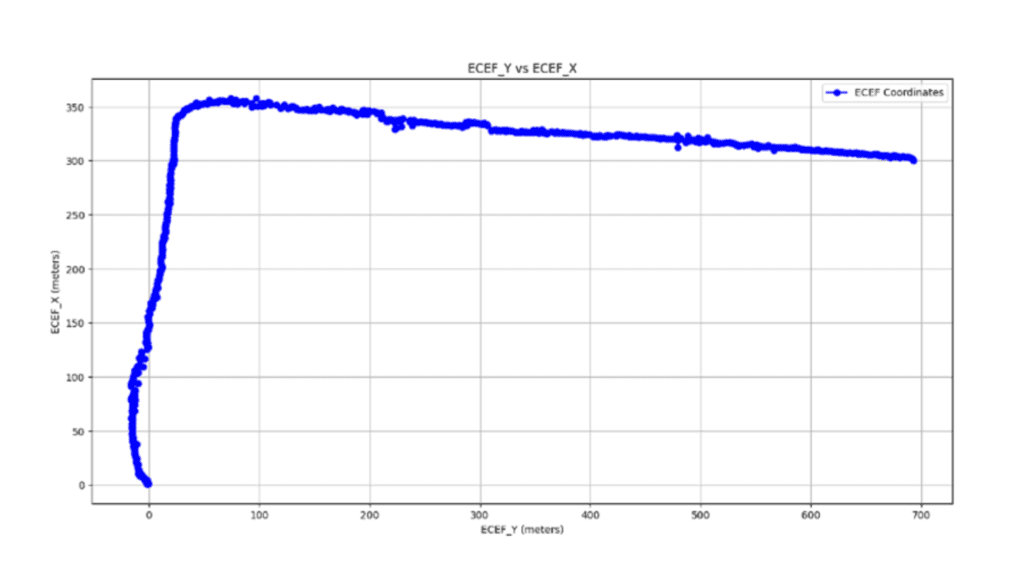

The drone was flown along an L-shaped path, and the results obtained are:

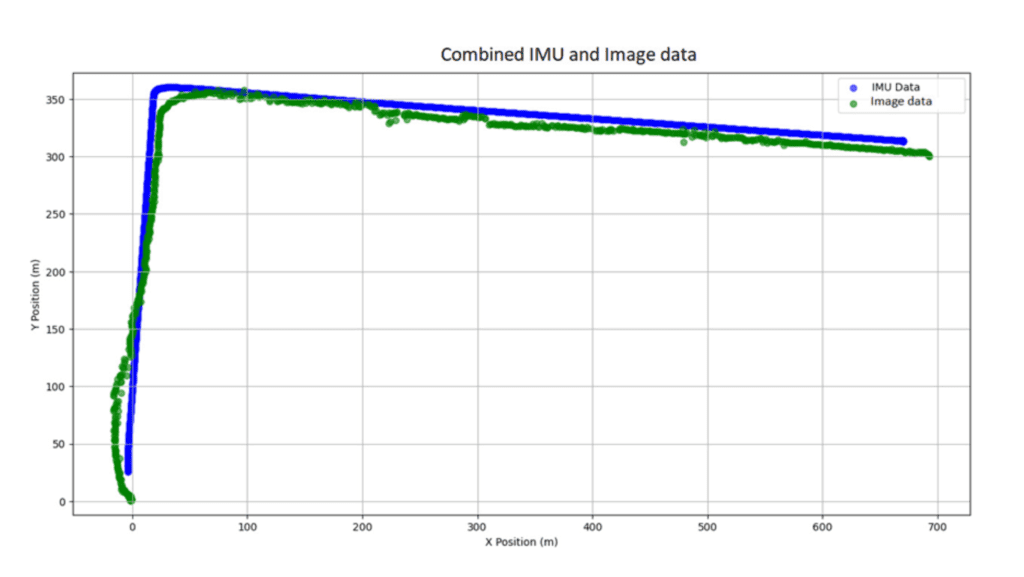

The drone’s position estimated from the image output was compared with the position derived from IMU data:

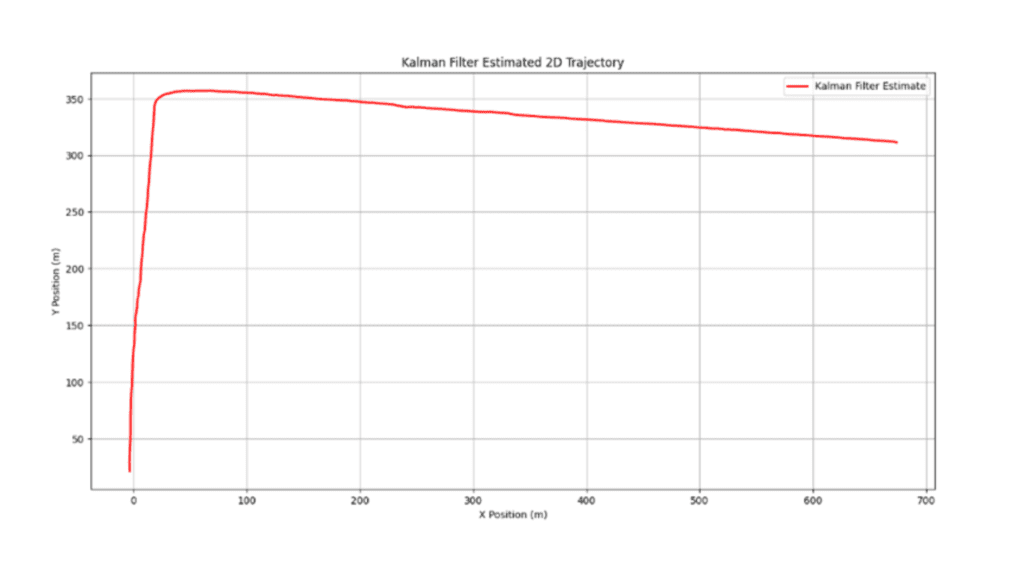

The image-based and IMU-based position estimates were successfully fused using a Kalman filter (KF).

Thus, the position error is only 5 meters while flying at a height of 500 meters (about 1% of the flight height), which is very encouraging. We are now working to develop a stand-alone vision-based navigation (VBN) system that will fuse GPS, IMU and camera data and provide position information for UAV navigation. It will continue to operate even when the GPS signal is jammed or unavailable.
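
To illustrate the kind of Kalman filter fusion described here, the following is a minimal one-axis sketch in which IMU acceleration drives the prediction step and an image-based position fix drives the update step. The state model, the class name `PositionKalman1D` and all noise values are assumptions chosen for illustration; the filter actually used in the project is not described in this text.

```python
class PositionKalman1D:
    """Constant-velocity Kalman filter along one axis.

    State: position p (m) and velocity v (m/s), with 2x2 covariance P.
    All default variances are illustrative assumptions.
    """

    def __init__(self, pos=0.0, vel=0.0, pos_var=100.0, vel_var=100.0):
        self.p = pos
        self.v = vel
        self.P = [[pos_var, 0.0], [0.0, vel_var]]

    def predict(self, accel, dt, accel_var=0.5):
        """Propagate the state using an IMU acceleration sample."""
        self.p += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        (a, b), (c, d) = self.P
        # F P F^T with F = [[1, dt], [0, 1]]
        a2 = a + dt * (b + c) + dt * dt * d
        b2 = b + dt * d
        c2 = c + dt * d
        # Process noise from uncertainty in the measured acceleration
        q = accel_var
        self.P = [
            [a2 + q * dt ** 4 / 4, b2 + q * dt ** 3 / 2],
            [c2 + q * dt ** 3 / 2, d + q * dt ** 2],
        ]

    def update(self, measured_pos, meas_var=25.0):
        """Correct the state with an image-based position fix."""
        (a, b), (c, d) = self.P
        s = a + meas_var              # innovation variance
        k0, k1 = a / s, c / s         # Kalman gain for H = [1, 0]
        y = measured_pos - self.p     # innovation
        self.p += k0 * y
        self.v += k1 * y
        # P = (I - K H) P
        self.P = [
            [(1 - k0) * a, (1 - k0) * b],
            [c - k1 * a, d - k1 * b],
        ]
```

In use, `predict` would be called at the IMU rate and `update` whenever a new image-based fix arrives; a full system would run one such filter per axis or a single filter with a larger state vector.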

After the concept was validated through initial simulations in Unity 3D, actual flights were carried out with a DJI drone to calculate the drone’s position from image information alone and fuse it with IMU data. The results, which were compared with GPS coordinates, look encouraging.
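
One simple way to compare an estimated track with GPS coordinates is to compute the great-circle distance between corresponding fixes. The sketch below uses the haversine formula on a spherical Earth. The function names are hypothetical, and this is not necessarily the comparison method used in the flights described above.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def mean_position_error_m(estimated, gps):
    """Mean horizontal error between two equally long lists of (lat, lon) fixes."""
    if not estimated or len(estimated) != len(gps):
        raise ValueError("tracks must be non-empty and of equal length")
    errors = [haversine_m(e[0], e[1], g[0], g[1])
              for e, g in zip(estimated, gps)]
    return sum(errors) / len(errors)
```

The two tracks must be time-aligned before they are passed in, since the comparison pairs fixes by index.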
